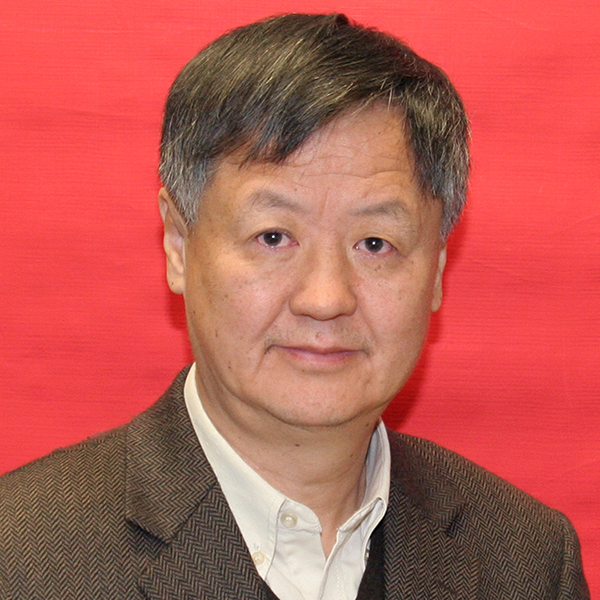

Jun Wang is the Chair Professor of Computational Intelligence in the Department of Computer Science and School of Data Science at City University of Hong Kong. Prior to this position, he held academic positions at Dalian University of Technology, Case Western Reserve University, the University of North Dakota, and the Chinese University of Hong Kong. He also held short-term visiting positions at the USAF Armstrong Laboratory, the RIKEN Brain Science Institute, and Shanghai Jiao Tong University. He received his B.S. degree in electrical engineering and his M.S. degree from Dalian University of Technology, and his Ph.D. degree from Case Western Reserve University. He was the Editor-in-Chief of the IEEE Transactions on Cybernetics. He is an IEEE Life Fellow, an IAPR Fellow, and a foreign member of Academia Europaea. He is a recipient of the APNNA Outstanding Achievement Award, the IEEE CIS Neural Networks Pioneer Award, and the IEEE SMCS Norbert Wiener Award, among other distinctions.

The past four decades have witnessed the birth and growth of neurodynamic optimization, which has emerged as a potentially powerful problem-solving tool for constrained optimization owing to its biological plausibility and its inherently parallel and distributed information processing. Despite this success, until a few years ago almost all neurodynamic approaches worked well only for optimization problems with convex or generalized convex functions; effective neurodynamic approaches to problems with nonconvex functions and discrete variables were rarely available. In this talk, a collaborative neurodynamic optimization framework will be presented. In this framework, multiple neurodynamic optimization models with different initial states are employed to perform scattered local searches. In addition, a metaheuristic rule from swarm intelligence, such as particle swarm optimization (PSO), is used to reposition the neuronal states after they converge locally, so that they escape local minima and move toward global optima. Experimental results will be presented to substantiate the efficacy of several specific paradigms of this framework for nonnegative matrix factorization, supervised learning, vehicle-task assignment, portfolio selection, and energy load dispatching.
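The abstract does not specify the neurodynamic model, the test problem, or the PSO settings, so the following Python sketch only illustrates the general idea under stated assumptions; it is not the speaker's implementation. It assumes a projection neural network, dx/dt = -x + P(x - alpha * grad f(x)), integrated with forward Euler as each local searcher, a box-constrained Rastrigin function as a stand-in nonconvex objective, and textbook PSO coefficients (`w`, `c1`, `c2`). All function names, bounds, and parameter values are hypothetical.

```python
import math
import random

# Hypothetical box constraint for the stand-in problem.
LOWER, UPPER = -5.12, 5.12


def f(x):
    """Hypothetical nonconvex test objective (Rastrigin function)."""
    return 10 * len(x) + sum(xi * xi - 10 * math.cos(2 * math.pi * xi) for xi in x)


def grad_f(x):
    """Gradient of the Rastrigin function."""
    return [2 * xi + 20 * math.pi * math.sin(2 * math.pi * xi) for xi in x]


def project(x):
    """Projection P onto the box [LOWER, UPPER]^n."""
    return [min(max(xi, LOWER), UPPER) for xi in x]


def neurodynamic_local_search(x0, alpha=0.01, dt=0.1, max_steps=5000, tol=1e-9):
    """Euler-integrate dx/dt = -x + P(x - alpha * grad f(x)) until the state settles.

    An equilibrium satisfies x = P(x - alpha * grad f(x)), i.e. a KKT point
    of the box-constrained problem (possibly only a local minimum).
    """
    x = list(x0)
    for _ in range(max_steps):
        target = project([xi - alpha * gi for xi, gi in zip(x, grad_f(x))])
        dx = [ti - xi for ti, xi in zip(target, x)]
        x = [xi + dt * dxi for xi, dxi in zip(x, dx)]
        if math.sqrt(sum(d * d for d in dx)) < tol:
            break
    return x


def collaborative_neurodynamic_optimization(n_models=10, dim=2, rounds=20,
                                            w=0.5, c1=1.5, c2=1.5, seed=0):
    """Run several neurodynamic models and reposition them with a PSO rule."""
    rng = random.Random(seed)
    # Each model starts from a different random initial state.
    states = [[rng.uniform(LOWER, UPPER) for _ in range(dim)] for _ in range(n_models)]
    velocities = [[0.0] * dim for _ in range(n_models)]
    pbest = [None] * n_models
    pbest_val = [math.inf] * n_models
    gbest, gbest_val = None, math.inf

    for _ in range(rounds):
        # Scattered local search: each model converges from its current state.
        for i in range(n_models):
            states[i] = neurodynamic_local_search(states[i])
            val = f(states[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = list(states[i]), val
            if val < gbest_val:
                gbest, gbest_val = list(states[i]), val
        # PSO rule: move each converged state toward its own best and the
        # swarm's best, giving it a chance to leave its current local minimum.
        for i in range(n_models):
            velocities[i] = [
                w * v + c1 * rng.random() * (p - s) + c2 * rng.random() * (g - s)
                for v, s, p, g in zip(velocities[i], states[i], pbest[i], gbest)
            ]
            states[i] = project([s + v for s, v in zip(states[i], velocities[i])])

    return gbest, gbest_val


if __name__ == "__main__":
    best, best_val = collaborative_neurodynamic_optimization()
    print("best state:", best, "objective:", best_val)
```

Because the best value is only ever replaced by a smaller one, the collaborative run is never worse than the first round of independent local searches; the PSO step is what gives stalled models a chance to improve on it.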